Cloud computing refers to the delivery of computing services—including servers, storage, databases, networking, software, analytics, and intelligence—over the internet ("the cloud") to offer faster innovation, flexible resources, and economies of scale.

It allows users to access and use resources as needed, paying only for what they use, much like electricity or water utility services.

This model contrasts with traditional methods of owning and maintaining physical data centers and servers.

Compute Resources

Compute resources refer to the components of a computing system that are responsible for processing data and performing calculations. These resources typically include:

Central Processing Unit (CPU): The CPU is the core component responsible for executing instructions and performing calculations. It is often referred to as the brain of the computer.

Memory (RAM): Random Access Memory (RAM) is used to temporarily store data that is being actively used or processed by the CPU. It is much faster than storage but is volatile, meaning it loses its contents when the computer is turned off.

Storage: Storage refers to the devices used to permanently store data, such as hard disk drives (HDDs) and solid-state drives (SSDs). Unlike RAM, storage retains data even when the computer is powered off.

Network: Network resources enable communication between computers and devices. This includes both physical network infrastructure (such as routers and switches) and the software protocols used to transmit data over networks (such as TCP/IP).

Graphics Processing Unit (GPU): GPUs are specialized processors designed to handle graphics-related tasks, such as rendering images and videos. They are also increasingly used for general-purpose computing tasks, particularly those that can benefit from parallel processing.

Accelerated Processing Unit (APU): APUs combine CPU and GPU components into a single chip, offering both general-purpose processing and graphics capabilities.

Cloud Compute Instances: In cloud computing, compute resources can also refer to virtual machines (VMs) or containers provisioned by cloud providers to run applications. These instances typically include CPU, RAM, and storage, and can be scaled up or down based on demand.
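As a rough illustration of scaling "based on demand", here is a minimal sketch of a proportional target-tracking rule. It has the same shape as the formula the Kubernetes Horizontal Pod Autoscaler documents: desired = ceil(current x currentMetric / targetMetric). The function name, the min/max bounds, and the CPU-utilization numbers are hypothetical. Real cloud autoscalers also apply cooldowns and tolerances, which this sketch leaves out.

```python
import math

def desired_instances(current: int, current_metric: float, target_metric: float,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Hypothetical target-tracking rule: scale the instance count in proportion
    to how far the observed metric (e.g. average CPU %) is from the target."""
    if current_metric < 0 or target_metric <= 0:
        raise ValueError("metric must be non-negative and target positive")
    desired = math.ceil(current * current_metric / target_metric)
    # Clamp to the configured bounds so we never scale to zero or without limit
    return max(min_instances, min(max_instances, desired))

scale_out = desired_instances(4, 90.0, 60.0)  # load above target: add instances
scale_in = desired_instances(4, 30.0, 60.0)   # load below target: remove instances
```

With 4 instances averaging 90% CPU against a 60% target, the rule asks for 6 instances. At 30% it asks for 2.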

Virtual Machines

A virtual machine (VM) is a software-based emulation (the process of mimicking the functionality of one system using a different system) of a physical computer.

It operates like a separate computer system within a host machine, allowing you to run multiple operating systems (OSes) and applications on a single physical machine. Here are some key points about VMs:

Hardware Emulation: VMs simulate computer hardware, including CPU, memory, storage, and network interfaces. This emulation allows them to run OSes and applications that are compatible with the emulated hardware.

Isolation: Each VM is isolated from other VMs and the host system. This isolation provides security and prevents one VM from affecting others if it crashes or becomes compromised.

Resource Allocation: VMs can be configured with specific amounts of CPU cores, memory, and disk space. These resources can be dynamically adjusted to meet the needs of the VM and the applications running on it.

Snapshotting: VMs often support snapshotting, which allows you to save the current state of a VM and revert to it later if needed. This feature is useful for testing, troubleshooting, and ensuring system stability.

Migration: VMs can be migrated from one physical host to another with little or no noticeable downtime using live migration techniques. This capability is valuable for load balancing, hardware maintenance, and disaster recovery.

Use Cases: VMs are used for a variety of purposes, including server consolidation, development and testing, software compatibility, and running legacy applications.

Types of Virtualization: There are different approaches to virtualization. In full virtualization, the hypervisor presents complete virtual hardware so that an unmodified guest OS can run. In paravirtualization, the guest OS is modified to communicate directly with the hypervisor. Container-based virtualization uses OS-level virtualization for lightweight isolation.
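Live migration is commonly implemented with a pre-copy approach, one technique among several. Memory is copied while the VM keeps running. Then only the pages the guest dirtied in the meantime are re-copied, and this repeats until the remainder is small enough to copy during a brief pause. The toy below models that loop. The page dictionaries, the `stop_threshold` parameter, and the simulated guest writes are hypothetical. A real hypervisor tracks dirty pages itself rather than receiving them as a list.

```python
def live_migrate(source, dirtied_per_round, stop_threshold=2):
    """Toy pre-copy live migration (hypothetical model).

    source: dict page_number -> contents, the running VM's memory (mutated as the guest writes)
    dirtied_per_round: simulated guest writes during each copy round, as {page: new_contents}
    Returns (destination memory, number of pages copied while the VM was paused).
    """
    dest = {}
    to_copy = set(source)  # first round: copy every page while the VM keeps running
    for writes in dirtied_per_round:
        for page in to_copy:
            dest[page] = source[page]
        source.update(writes)   # the guest keeps running and dirties some pages
        to_copy = set(writes)   # next round re-copies only what changed
        if len(to_copy) <= stop_threshold:
            break
    # Stop-and-copy: pause the VM briefly and copy the last dirty pages
    paused_copies = len(to_copy)
    for page in to_copy:
        dest[page] = source[page]
    return dest, paused_copies

memory = {i: f"v0-{i}" for i in range(8)}
dest, paused = live_migrate(memory, [{1: "a", 2: "b", 3: "c"}, {2: "d"}])
```

Only one page has to be copied while the VM is paused, which is why the downtime can be very short.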

Full Virtualization

A hypervisor is used to create and manage VMs.

Guest OSes running on those VMs are unaware that they are virtualized and run unmodified.

The hypervisor intercepts privileged instructions and hardware accesses from the guest OS and translates them into operations on the underlying physical hardware.
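The interception step can be pictured as a toy trap-and-emulate loop, one classic way full virtualization is implemented. Ordinary instructions run directly. Privileged ones trap to the hypervisor, which emulates them against virtual device state. The opcodes and class names are hypothetical. Real hypervisors rely on CPU features such as Intel VT-x or AMD-V, or on binary translation, rather than on an interpreter loop.

```python
# Hypothetical opcodes that only the hypervisor may execute on real hardware
PRIVILEGED = {"OUT", "HLT"}

class TrapAndEmulateHypervisor:
    def __init__(self):
        self.virtual_device_output = []  # what the guest "sent" to its virtual device
        self.guest_halted = False

    def handle_trap(self, op, arg):
        # Emulate the privileged instruction against virtual state, not real hardware
        if op == "OUT":
            self.virtual_device_output.append(arg)
        elif op == "HLT":
            self.guest_halted = True

def run_unmodified_guest(program, hv):
    """Run (opcode, operand) pairs; the guest code contains no hypervisor-specific calls."""
    acc = 0
    for op, arg in program:
        if op in PRIVILEGED:
            hv.handle_trap(op, arg)  # the CPU would trap here; the guest is unaware
            if hv.guest_halted:
                break
        elif op == "ADD":
            acc += arg  # unprivileged instructions run directly
        else:
            raise ValueError(f"unknown opcode: {op}")
    return acc

hv = TrapAndEmulateHypervisor()
result = run_unmodified_guest(
    [("ADD", 2), ("OUT", 42), ("ADD", 3), ("HLT", None), ("ADD", 100)], hv)
```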

Para Virtualization

Similar to full virtualization, with one key difference: guest OSes are aware that they are running in a virtualized environment and have been modified to use a special API (hypercalls) provided by the hypervisor.

The API allows the guest OS to communicate more efficiently with the hypervisor, resulting in better performance compared to full virtualization.

The guest OS must be modified, which can be a limiting factor, since not every OS can be modified.
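To contrast with trapping, the sketch below shows a paravirtualized guest calling the hypervisor explicitly. A modified guest kernel can batch several page-table updates into one hypercall instead of making one change at a time. This is a simplification: modern hardware-assisted virtualization also reduces such traps. Both classes and the `update_page_table` hypercall name are hypothetical and are not any real hypervisor's API.

```python
class ParavirtHypervisor:
    """Hypothetical hypervisor exposing a tiny hypercall API."""
    def __init__(self):
        self.page_table = {}   # guest virtual page -> host physical page
        self.hypercalls = 0    # each hypercall is one switch into the hypervisor

    def hypercall(self, name, **kwargs):
        self.hypercalls += 1
        if name == "update_page_table":
            # One hypercall applies a whole batch of updates
            self.page_table.update(kwargs["updates"])
            return 0
        raise ValueError(f"unknown hypercall: {name}")

class ParavirtGuestKernel:
    """A 'modified' guest kernel: it knows it is virtualized and calls the hypervisor directly."""
    def __init__(self, hv):
        self.hv = hv

    def map_pages(self, updates):
        # Instead of writing page-table entries one at a time (which a hypervisor may
        # have to intercept individually under full virtualization), batch them.
        return self.hv.hypercall("update_page_table", updates=updates)

hv = ParavirtHypervisor()
guest = ParavirtGuestKernel(hv)
guest.map_pages({0x1000: 0x9000, 0x2000: 0xA000, 0x3000: 0xB000})
```

Three mappings cost a single transition into the hypervisor here. That cooperation is the source of paravirtualization's performance advantage, and also the reason the guest must be modified.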

Operating system-level Virtualization (Containerization)

A lightweight form of virtualization that allows multiple isolated user-space instances, called containers, to run on a single OS.

Unlike full virtualization and paravirtualization, containers do not require a separate guest OS for each instance; they share the host's kernel.

Virtualization Platforms: Several virtualization platforms are available, including VMware vSphere, Microsoft Hyper-V, and open-source solutions like KVM (Kernel-based Virtual Machine) and VirtualBox.

How Do Virtual Machines Work?

Virtual machines (VMs) work by virtualizing hardware resources and running multiple operating systems and applications on a single physical machine.

This virtualization process is managed by software known as a hypervisor, which interacts with the physical hardware and separates the physical resources from the virtual environments.

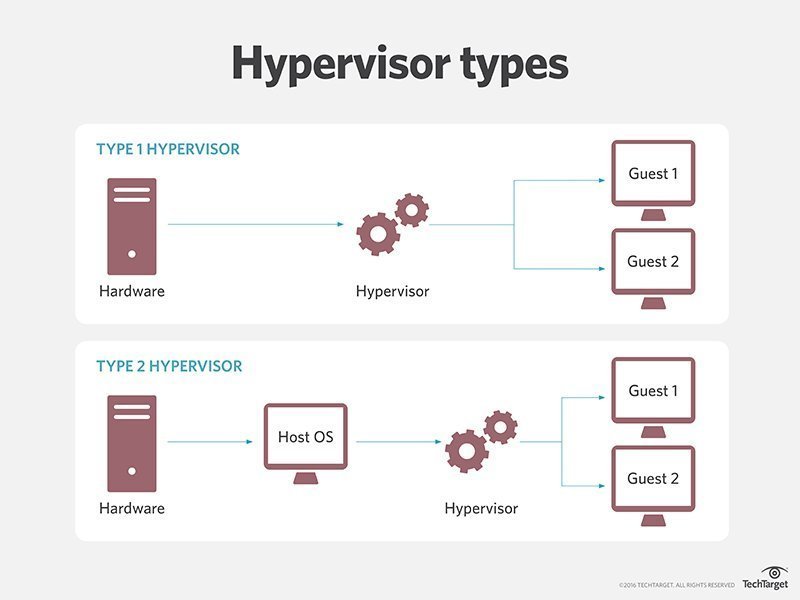

There are two main types of hypervisors:

Type 1 Hypervisor (Bare Metal): This hypervisor runs directly on the host machine's hardware and does not require a base operating system. VMs interact directly with the hypervisor to allocate hardware resources.

Examples of Type 1 hypervisors include VMware ESXi, Microsoft Hyper-V, and KVM (Kernel-based Virtual Machine).

Type 2 Hypervisor (Hosted): This hypervisor runs on top of a host operating system. VM requests are passed to the host operating system, which then provisions the appropriate physical resources to each guest.

Examples of Type 2 hypervisors include VMware Workstation, Oracle VirtualBox, and Parallels Desktop.

The hypervisor is responsible for managing and provisioning resources such as processors, memory, networking, and storage from the host to the guests.

It also schedules operations in VMs to prevent them from overrunning each other when using resources. If a user or program requires additional resources, the hypervisor schedules the request to the physical system's resources so that the VM's operating system and applications can access the shared pool of physical resources.
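Provisioning from a shared pool can be sketched as simple bookkeeping. A VM gets resources only if enough remain on the host, and releasing a VM returns its share to the pool. This hypothetical `Host` class never overcommits. Real hypervisors often let the total assigned vCPUs, and sometimes memory, exceed the physical amount and rely on scheduling to share it.

```python
from dataclasses import dataclass, field

@dataclass
class Host:
    """Hypothetical host whose CPUs and memory form a shared pool for its VMs."""
    cpus: int
    memory_gib: int
    allocations: dict = field(default_factory=dict)  # vm name -> (cpus, memory_gib)

    def free(self):
        used_cpu = sum(c for c, _ in self.allocations.values())
        used_mem = sum(m for _, m in self.allocations.values())
        return self.cpus - used_cpu, self.memory_gib - used_mem

    def provision(self, vm, cpus, memory_gib):
        free_cpu, free_mem = self.free()
        if cpus > free_cpu or memory_gib > free_mem:
            return False  # not enough physical resources left in the pool
        self.allocations[vm] = (cpus, memory_gib)
        return True

    def release(self, vm):
        # Return the VM's resources to the shared pool
        self.allocations.pop(vm, None)

host = Host(cpus=16, memory_gib=64)
host.provision("web", 4, 16)
host.provision("db", 8, 32)
```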

Why Are VMs Needed?

Server Consolidation: VMs allow for the efficient use of physical hardware by running multiple virtual servers on a single physical machine. This improves hardware utilization, reduces costs, and cuts space and cooling requirements in data centers.

Separate Development and Test Environments: VMs provide isolated environments for testing and development, allowing developers to work without impacting the production infrastructure.

Boosting DevOps: VMs can be easily provisioned, scaled, and adapted, providing flexibility for development and production scale-up as needed.

Disaster Recovery and Business Continuity: VMs can be replicated to cloud environments, so if the primary site fails, workloads can be restarted from the replicas with little interruption.

Workload Migration: VMs offer flexibility and portability, making it easier to migrate workloads between different environments.

Creating a Hybrid Environment: VMs enable the creation of hybrid cloud environments, allowing for flexibility without abandoning legacy systems.

Virtual CPU (vCPU)

A vCPU stands for virtual Central Processing Unit and represents a portion or share of the underlying physical CPU that is assigned to a particular virtual machine (VM).

Thread: A thread is a path of execution within a process. Threads within the same process share memory space, while processes run in separate memory spaces. Multiple threads can run in parallel within a process.

Physical Core: A physical core is a processing unit within the CPU that can execute instructions. A CPU may have multiple physical cores.

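Logical Core: A logical core (also called a hardware thread) lets a single physical core execute two or more threads simultaneously, so the OS sees each physical core as multiple cores. This is often achieved through simultaneous multithreading technologies such as Intel's Hyper-Threading.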

vCPU Calculation

The number of vCPUs can be calculated using the formula:

(Threads per core x Cores per CPU) x Physical CPUs = Number of vCPUs.

For example, if a CPU has 8 cores and each core can run 2 threads (16 threads in total), the calculation is (2 Threads x 8 Cores) x 1 CPU = 16 vCPUs.
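The formula is a straight multiplication, sketched below. It assumes one vCPU maps to one hardware thread (logical core). Many hypervisors let you overcommit and assign more vCPUs in total than there are threads, so treat the result as a one-to-one baseline. The function name is illustrative. `os.cpu_count()` is a standard-library call that reports the logical CPUs of the machine running the script.

```python
import os

def vcpu_count(threads_per_core: int, cores_per_cpu: int, physical_cpus: int) -> int:
    """(threads per core x cores per CPU) x physical CPUs, assuming one vCPU per hardware thread."""
    return threads_per_core * cores_per_cpu * physical_cpus

single_socket = vcpu_count(2, 8, 1)  # one 8-core CPU with 2 threads per core
dual_socket = vcpu_count(2, 8, 2)    # a server with two such CPUs

# Logical CPUs (hardware threads) visible on this machine; may be None if undetermined
print(single_socket, dual_socket, os.cpu_count())
```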

How Do vCPUs Work?

In a virtualized environment, the hypervisor is responsible for managing and controlling virtual machines (VMs) and their resources. When a VM is created, the hypervisor allocates a portion of the physical CPU's computing resources to a virtual CPU (vCPU) within that VM. This vCPU acts as if it were a physical CPU, handling processing tasks for the VM.

The hypervisor ensures that each vCPU gets its fair share of the physical CPU's resources and manages the scheduling of vCPU tasks to optimize performance and resource utilization across all VMs running on the host machine. This allows multiple VMs to run concurrently on a single physical machine, each with its own vCPUs, effectively sharing the resources of the underlying physical CPU.
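The idea of a "fair share" can be illustrated with a simple proportional-share policy. Each time slice of a physical core goes to the VM that has received the least CPU time relative to its weight. This is a hypothetical sketch, not the scheduling algorithm of any particular hypervisor. The VM names and weights are made up.

```python
def proportional_share(weights, slices):
    """Give out `slices` time slices of one physical core in proportion to VM weights."""
    used = {vm: 0 for vm in weights}
    timeline = []
    for _ in range(slices):
        # Pick the VM with the least CPU time per unit of weight (ties go to the first listed)
        vm = min(weights, key=lambda v: used[v] / weights[v])
        used[vm] += 1
        timeline.append(vm)
    return used, timeline

used, timeline = proportional_share({"vm-a": 2, "vm-b": 1}, slices=6)
```

With weights 2:1, `vm-a` ends up with 4 of the 6 slices and `vm-b` with 2, interleaved rather than run back to back.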

When choosing a CPU, note that higher clock frequencies generally indicate better performance. However, different CPU designs handle instructions differently. An older CPU with a higher clock speed can therefore be outperformed by a newer CPU with a lower clock speed, because the newer architecture completes more work per clock cycle.
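A common back-of-the-envelope model makes this concrete. Instructions per second is roughly the average instructions per cycle (IPC) multiplied by the clock frequency. The IPC and GHz figures below are hypothetical, chosen only to show a lower-clocked CPU coming out ahead. Real performance also depends on memory, caches, and the workload.

```python
def instructions_per_second(ipc: float, clock_ghz: float) -> float:
    """Rough throughput estimate: average instructions per cycle x cycles per second."""
    return ipc * clock_ghz * 1e9

# Hypothetical CPUs: an older, higher-clocked design vs a newer, more efficient one
older = instructions_per_second(ipc=1.5, clock_ghz=4.0)
newer = instructions_per_second(ipc=2.5, clock_ghz=3.0)
print(f"older: {older:.2e} instr/s, newer: {newer:.2e} instr/s")
```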

Most Common CPU Architectures for Cloud Instances

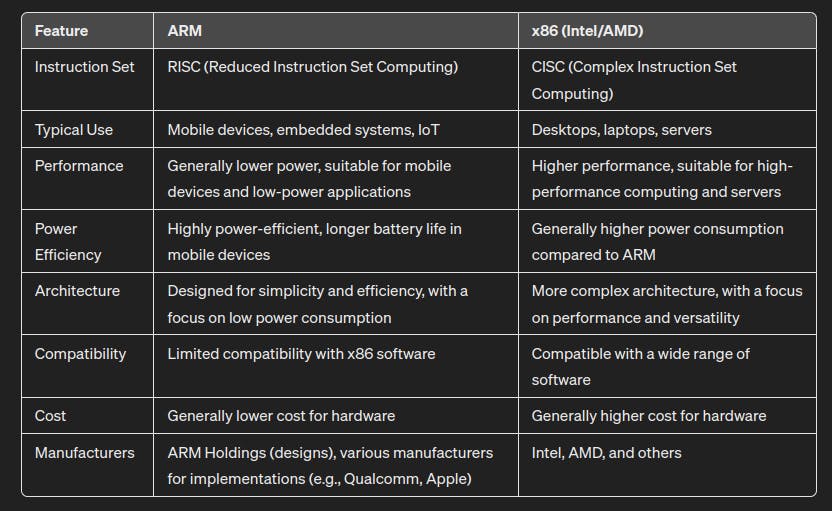

1) ARM processors

ARM stands for Advanced RISC Machine, and RISC stands for Reduced Instruction Set Computing. This means the CPU uses a smaller set of simpler instructions, most of which are designed to execute in a single clock cycle.

ARM processors are designed to be smaller, more energy-efficient, and to generate less heat. This makes them well suited to mobile devices such as smartphones. The small size suits compact devices, the energy efficiency extends battery life, and the lower heat output matters for a device that is constantly held in the hand.

2) x86 processors

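x86 is the most widely used instruction set on PCs, and perhaps the one with the most history. Its instructions can be complex: a single x86 instruction can perform a series of operations, such as reading a value from memory, adding to it, and writing the result back, although such an instruction typically takes several clock cycles to execute. Processing units with this type of instruction set are called complex instruction set computers (CISC).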

x86 CPUs tend to offer high computing performance. Because each instruction can do more work, programs can be written with fewer instructions, which can make low-level programming simpler. This comes at the expense of larger, more expensive chips with more transistors, which typically use more energy than ARM processors.
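The RISC/CISC difference can be seen in a tiny interpreter that runs the same task both ways: add a register's value to a memory location. The RISC style needs separate load, add, and store instructions. The CISC style does the read-modify-write in one instruction, similar in spirit to an x86 `add` with a memory destination. The opcodes are hypothetical. Fewer instructions does not automatically mean faster, because the CISC instruction still takes several cycles internally.

```python
def run(program, mem, regs):
    """Execute (opcode, *operands) tuples on a toy machine; return how many instructions ran."""
    for op, *args in program:
        if op == "LOAD":      # RISC-style: register <- memory[addr]
            reg, addr = args
            regs[reg] = mem[addr]
        elif op == "STORE":   # RISC-style: memory[addr] <- register
            reg, addr = args
            mem[addr] = regs[reg]
        elif op == "ADD":     # RISC-style: register <- register + register
            dst, a, b = args
            regs[dst] = regs[a] + regs[b]
        elif op == "ADDM":    # CISC-style: memory[addr] += register in a single instruction
            addr, reg = args
            mem[addr] += regs[reg]
        else:
            raise ValueError(f"unknown opcode: {op}")
    return len(program)  # no branches, so every instruction runs exactly once

risc_program = [("LOAD", "r2", 0), ("ADD", "r2", "r2", "r1"), ("STORE", "r2", 0)]
cisc_program = [("ADDM", 0, "r1")]

risc_mem, cisc_mem = [10], [10]
risc_count = run(risc_program, risc_mem, {"r1": 5})
cisc_count = run(cisc_program, cisc_mem, {"r1": 5})
```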

ARM vs x86

Cloud Storage

Data storage

Data storage refers to the process of storing digital information in a storage medium for later retrieval and use. It is essential for computers and other digital devices to store data for various purposes.

Cloud Data Storage

Cloud storage is a cloud computing model that enables storing data and files on the internet through a cloud computing provider that you access either through the public internet or a dedicated private network connection.

The provider securely stores, manages, and maintains the storage servers, infrastructure, and network to ensure you have access to the data when you need it at virtually unlimited scale, and with elastic capacity.

Cloud storage removes the need to buy and manage your own data storage infrastructure, giving you agility, scalability, and durability, with anytime, anywhere data access.

How Does Cloud Storage Work?

Cloud storage is delivered by a cloud services provider that owns and operates data storage capacity by maintaining large data centers in multiple locations around the world.

Cloud storage providers manage capacity, security, and durability to make data accessible to your applications over the internet in a pay-as-you-go model.

Types of Data Storage

1) Object storage

Object storage is a data storage architecture for large stores of unstructured data.

Organizations have to store a massive and growing amount of unstructured data, such as photos, videos, machine learning (ML) training data, sensor data, audio files, and other types of web content, and need scalable, efficient, and affordable ways to store it.

Object storage options include Amazon S3 (AWS), Azure Blob Storage, and Google Cloud Storage.
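The object model can be sketched in memory. A bucket is a flat mapping from keys to objects (data plus metadata), and "folders" are only key prefixes. The `InMemoryBucket` class and its method names are hypothetical stand-ins. Real services are used through their own SDKs or REST APIs, for example PUT and GET requests on a bucket and key in S3.

```python
import hashlib
from dataclasses import dataclass, field

@dataclass
class StoredObject:
    data: bytes
    metadata: dict
    checksum: str

@dataclass
class InMemoryBucket:
    """Hypothetical stand-in for an object-storage bucket: a flat key -> object map."""
    objects: dict = field(default_factory=dict)

    def put(self, key: str, data: bytes, **metadata) -> str:
        checksum = hashlib.sha256(data).hexdigest()  # fingerprint of the content
        # Objects are written whole; a second put replaces the entire object
        self.objects[key] = StoredObject(data, metadata, checksum)
        return checksum

    def get(self, key: str) -> StoredObject:
        return self.objects[key]

    def list_keys(self, prefix: str = "") -> list:
        # There is no directory tree: listing a "folder" is just filtering keys by prefix
        return sorted(k for k in self.objects if k.startswith(prefix))

bucket = InMemoryBucket()
bucket.put("photos/2024/cat.jpg", b"\xff\xd8", content_type="image/jpeg")
bucket.put("photos/2024/dog.jpg", b"\xff\xd9")
bucket.put("videos/intro.mp4", b"\x00\x01")
```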

2) File storage

File-based storage, also known as file storage, is a common type of storage used in computing where data is stored in a hierarchical format of folders and files.

This format is easy for users to understand and interact with, as they can request files using unique identifiers such as names, locations, or URLs. This is the predominant human-readable storage format.

Examples include Amazon Elastic File System (Amazon EFS), Amazon FSx for Windows File Server, Amazon FSx for Lustre, Azure Files, and Google Cloud Filestore.
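To an application, a mounted file share behaves like an ordinary directory tree: files sit inside nested folders and are addressed by path. In the sketch below, a local temporary directory stands in for the mounted share (an assumption for illustration). The folder and file names are hypothetical.

```python
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as root:
    share = Path(root)  # stand-in for a mounted network file share
    # Files live inside a hierarchy of folders and are addressed by path
    report = share / "finance" / "2024" / "q1-report.txt"
    report.parent.mkdir(parents=True)
    report.write_text("revenue,1000\n")

    # Walk the tree the way a user browses folders
    paths = sorted(p.relative_to(share).as_posix() for p in share.rglob("*"))
    content = report.read_text()

print(paths)  # ['finance', 'finance/2024', 'finance/2024/q1-report.txt']
```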

3) Block Storage

Block storage is a type of storage that divides storage volumes into individual blocks or chunks. Each block exists independently and has its own unique identifier, which allows for quick storage and retrieval. This type of storage is often used for enterprise applications like databases or ERP systems that require dedicated, low-latency storage for each host.

Block storage is similar to direct-attached storage (DAS) or a storage area network (SAN) in that it provides high-performance, low-latency storage that is directly attached to a specific host or server.

Some examples of cloud storage services that provide block storage include Amazon Elastic Block Store (EBS), Azure Managed Disks, Google Cloud Persistent Disks, and Google Cloud Solid State Disks (SSDs).
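A block device can be modeled as fixed-size blocks addressed only by block number. The device knows nothing about files. A file system or database on the host decides which blocks hold what. The `BlockDevice` class, the `write_bytes` helper, and the tiny 4-byte block size are hypothetical. Real volumes typically use blocks of a few kilobytes.

```python
class BlockDevice:
    """Toy block device: fixed-size blocks addressed only by block number."""
    def __init__(self, num_blocks: int, block_size: int = 4096):
        self.block_size = block_size
        self.blocks = [bytes(block_size) for _ in range(num_blocks)]  # zero-filled

    def write_block(self, index: int, data: bytes) -> None:
        if len(data) > self.block_size:
            raise ValueError("data larger than one block")
        # Pad to the full block size: the device always stores whole blocks
        self.blocks[index] = data.ljust(self.block_size, b"\0")

    def read_block(self, index: int) -> bytes:
        return self.blocks[index]

def write_bytes(dev: BlockDevice, start_block: int, data: bytes) -> int:
    """Hypothetical helper: split data across consecutive blocks; return blocks used."""
    bs = dev.block_size
    for offset in range(0, len(data), bs):
        dev.write_block(start_block + offset // bs, data[offset:offset + bs])
    return (len(data) + bs - 1) // bs

dev = BlockDevice(num_blocks=8, block_size=4)
used_blocks = write_bytes(dev, 2, b"hello world")
```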

4) Network-attached storage (NAS)

A NAS (Network-Attached Storage) device is a storage device that is connected to a network and provides file-based storage services to other devices on the network.

It is essentially a specialized file server designed for storing and serving files over a network.

NAS devices are typically dedicated hardware appliances or servers. They often include multiple hard drives configured in a RAID array for data redundancy and performance.

An example is Amazon EFS (Elastic File System).

Some Key Concepts

1) IOPS (Input/Output Operations Per Second)

- A metric used to measure the performance of storage devices, such as hard disk drives (HDDs), solid-state drives (SSDs), and storage area networks (SANs). It indicates how many input/output operations a storage device can perform in one second.

2) SSD-backed Volumes

- SSD-backed volumes are optimized for transactional workloads that involve frequent read/write operations with small I/O sizes. SSDs offer significantly faster and more predictable performance than HDDs, but they are also more expensive.

3) HDD-backed Volumes

- HDD-backed volumes are optimized for large streaming workloads where the dominant performance attribute is throughput (the amount of data transferred per unit of time). HDDs have more limited throughput compared to SSDs but are less expensive.
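IOPS and throughput are linked by the size of each I/O: throughput = IOPS x I/O size. This is why small-I/O transactional workloads are described by IOPS and large streaming workloads by throughput. The workload figures below are hypothetical and are not the limits of any specific volume type.

```python
def throughput_mib_s(iops: int, io_size_kib: int) -> float:
    """Throughput = IOPS x I/O size, converted from KiB/s to MiB/s."""
    return iops * io_size_kib / 1024

# Hypothetical workloads
small_random = throughput_mib_s(iops=3000, io_size_kib=16)        # many small I/Os
large_sequential = throughput_mib_s(iops=500, io_size_kib=1024)   # few large I/Os
```

Here the small-I/O workload performs six times as many operations per second, yet moves less than a tenth of the data of the large sequential one.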

4) Magnetic Volumes

- Magnetic volumes are backed by magnetic drives (traditional HDDs) and are suited for workloads where data is accessed infrequently and low-cost storage for small volume sizes is important. Magnetic volumes have lower performance than SSD-backed and HDD-backed volumes but are more cost-effective.

5) Cloud-based Network Function Virtualization (NFV)

- NFV is a technology that virtualizes network services, such as firewalls, load balancers, and VPNs. Instead of using dedicated hardware appliances, NFV allows these functions to be implemented in software and run on standard servers.
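To illustrate that a network function is "just software", here is a minimal first-match packet filter of the kind a virtualized firewall might apply. The rule set, addresses, and ports are hypothetical. A real NFV firewall would inspect actual packets and far more fields, but the decision logic runs on ordinary servers in the same way.

```python
import ipaddress

# Hypothetical rule set: (action, source network, destination port or None for any port)
RULES = [
    ("allow", ipaddress.ip_network("10.0.0.0/8"), 22),   # SSH only from the internal network
    ("allow", ipaddress.ip_network("0.0.0.0/0"), 443),   # HTTPS from anywhere
    ("deny", ipaddress.ip_network("0.0.0.0/0"), None),   # everything else
]

def filter_packet(src_ip: str, dst_port: int) -> str:
    """Return the action of the first matching rule; deny if nothing matches."""
    addr = ipaddress.ip_address(src_ip)
    for action, network, port in RULES:
        if addr in network and (port is None or port == dst_port):
            return action
    return "deny"
```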

6) Cloud-based Network and Application Performance Management (NPM/APM)

- NPM and APM tools are used to monitor and optimize the performance of networks and applications running in the cloud. NPM focuses on monitoring network performance metrics such as bandwidth utilization, latency, and packet loss. APM, on the other hand, focuses on monitoring the performance and availability of applications, including response times, error rates, and user interactions.
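As a sketch of what an APM tool computes, the snippet below derives an error rate, a median response time, and a 90th-percentile response time from request samples using Python's standard `statistics` module. The samples are hypothetical. Real tools collect them continuously and aggregate over time windows.

```python
import statistics

# Hypothetical request samples: (response time in ms, HTTP status code)
samples = [(120, 200), (95, 200), (310, 500), (101, 200), (88, 200),
           (140, 200), (2000, 504), (110, 200), (99, 200), (130, 200)]

latencies = [ms for ms, _ in samples]
error_rate = sum(1 for _, status in samples if status >= 500) / len(samples)
median_ms = statistics.median(latencies)
p90_ms = statistics.quantiles(latencies, n=10)[-1]  # last decile cut point = 90th percentile

print(f"error rate {error_rate:.0%}, median {median_ms} ms, p90 {p90_ms:.0f} ms")
```

The median looks healthy, but the 90th percentile exposes the slow outlier. This is why APM dashboards usually report percentiles rather than only averages.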

7) Cloud-based Software Defined Networking (SDN)

SDN is an approach to network management that separates the control plane (which determines how data packets should be forwarded) from the data plane (also known as the forwarding plane, responsible for actually forwarding the data packets based on the decisions made by the control plane).

In traditional networks, both control and data functions are tightly integrated into network devices (routers, switches). SDN decouples the two, moving the control plane out of the individual devices.

In an SDN architecture, the control plane is implemented in software, known as the SDN controller, which is separate from the network devices. The SDN controller communicates with the network devices using a standardized protocol, such as OpenFlow, to configure and manage the network.
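The split between the two planes can be sketched with two small classes. Switches only look up destinations in a flow table. A central controller decides the path and installs the entries. This is a hypothetical model, not OpenFlow: a real controller would program the devices over a protocol such as OpenFlow instead of writing to their tables directly.

```python
class Switch:
    """Data plane: forwards packets using only its flow table."""
    def __init__(self, name):
        self.name = name
        self.flow_table = {}  # destination -> output port, installed by the controller

    def forward(self, dst):
        # No routing logic here; destinations without an entry are dropped
        return self.flow_table.get(dst, "drop")

class Controller:
    """Control plane: decides paths centrally and programs the switches."""
    def __init__(self, switches):
        self.switches = switches

    def install_route(self, dst, hops):
        # hops: list of (switch name, output port) along the chosen path
        for name, port in hops:
            self.switches[name].flow_table[dst] = port

switches = {"s1": Switch("s1"), "s2": Switch("s2")}
controller = Controller(switches)
controller.install_route("10.0.0.5", [("s1", 2), ("s2", 1)])
```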